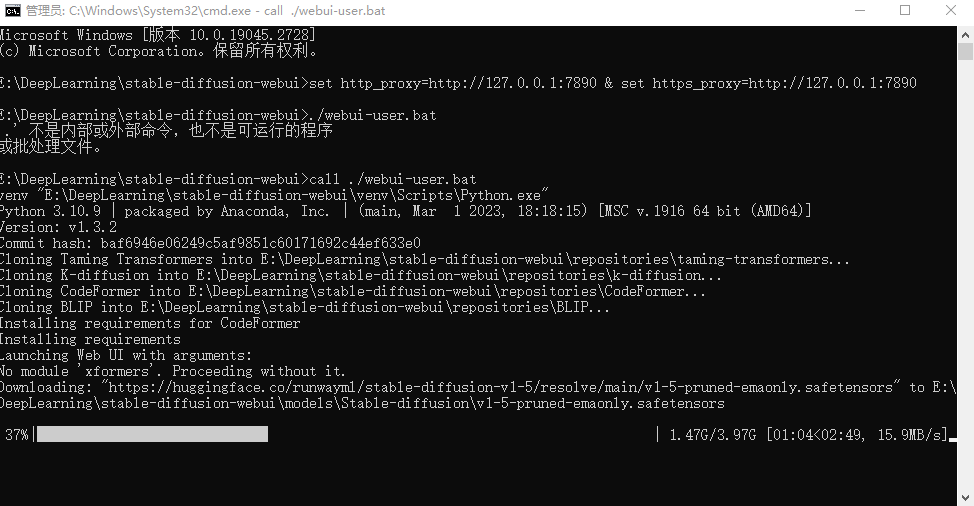

Double-clicking webui-user.bat to run it directly fails with the following error:

    Cloning Taming Transformers into E:\DeepLearning\stable-diffusion-webui\repositories\taming-transformers...
    Traceback (most recent call last):
      File "E:\DeepLearning\stable-diffusion-webui\launch.py", line 38, in <module>
        main()
      File "E:\DeepLearning\stable-diffusion-webui\launch.py", line 29, in main
        prepare_environment()
      File "E:\DeepLearning\stable-diffusion-webui\modules\launch_utils.py", line 289, in prepare_environment
        git_clone(taming_transformers_repo, repo_dir('taming-transformers'), "Taming Transformers", taming_transformers_commit_hash)
      File "E:\DeepLearning\stable-diffusion-webui\modules\launch_utils.py", line 147, in git_clone
        run(f'"{git}" clone "{url}" "{dir}"', f"Cloning {name} into {dir}...", f"Couldn't clone {name}")
      File "E:\DeepLearning\stable-diffusion-webui\modules\launch_utils.py", line 101, in run
        raise RuntimeError("\n".join(error_bits))
    RuntimeError: Couldn't clone Taming Transformers.
    Command: "git" clone "https://github.com/CompVis/taming-transformers.git" "E:\DeepLearning\stable-diffusion-webui\repositories\taming-transformers"
    Error code: 128
    stderr: Cloning into 'E:\DeepLearning\stable-diffusion-webui\repositories\taming-transformers'...
    fatal: unable to access 'https://github.com/CompVis/taming-transformers.git/': Failed to connect to github.com port 443 after 21089 ms: Couldn't connect to server

Solution:

Open cmd in the current folder, configure a proxy in that session, and then run the webui-user.bat file in the same folder:

    call ./webui-user.bat
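The post does not show how the proxy is configured. Below is a minimal sketch of one way to do it, assuming a local HTTP proxy at the hypothetical address `http://127.0.0.1:7890` (replace it with your own). It relies on git honoring the `http_proxy` / `https_proxy` environment variables. The helper names `build_proxy_env` and `launch_webui` are illustrative and not part of the webui.

```python
import os
import subprocess

# Hypothetical proxy address (an assumption, not from the original post).
PROXY_URL = "http://127.0.0.1:7890"


def build_proxy_env(proxy_url, base_env=None):
    """Return a copy of the environment with the proxy variables set."""
    env = dict(os.environ if base_env is None else base_env)
    # Set both spellings; different tools read different cases.
    for name in ("http_proxy", "https_proxy", "HTTP_PROXY", "HTTPS_PROXY"):
        env[name] = proxy_url
    return env


def launch_webui(bat_path="webui-user.bat", proxy_url=PROXY_URL):
    """Run the launcher through cmd with the proxy applied to the child process only."""
    return subprocess.run(
        ["cmd", "/c", bat_path],
        env=build_proxy_env(proxy_url),
        check=False,
    )
```

Calling `launch_webui()` from the webui folder on Windows should start the launcher with the proxy variables visible to the `git clone` step. In a plain cmd session the equivalent would be `set http_proxy=...` and `set https_proxy=...` before the `call` line.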

OutOfMemoryError: CUDA out of memory. Tried to allocate 67.91 GiB (GPU 0; 11.99 GiB total capacity; 2.58 GiB already allocated; 7.09 GiB free; 2.62 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Reference: https://huggingface.co/spaces/stabilityai/stable-diffusion/discussions/21

See also: "How annotation bounding boxes change when an object-detection image is resized" (Python图像识别's blog, CSDN)
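The figures in the out-of-memory message above are worth reading closely before trying `max_split_size_mb`. The sketch below parses the message quoted in this post and compares the requested allocation against the card's total capacity. The regular expressions assume the message keeps this exact wording, which may differ between PyTorch versions.

```python
import re

# The error text quoted in this post.
OOM_MESSAGE = (
    "OutOfMemoryError: CUDA out of memory. Tried to allocate 67.91 GiB "
    "(GPU 0; 11.99 GiB total capacity; 2.58 GiB already allocated; "
    "7.09 GiB free; 2.62 GiB reserved in total by PyTorch)"
)

PATTERNS = {
    "requested": r"Tried to allocate ([\d.]+) GiB",
    "total": r"([\d.]+) GiB total capacity",
    "allocated": r"([\d.]+) GiB already allocated",
    "free": r"([\d.]+) GiB free",
    "reserved": r"([\d.]+) GiB reserved",
}


def parse_oom(message):
    """Extract the GiB figures from an OOM message of the form shown above."""
    figures = {}
    for key, pattern in PATTERNS.items():
        match = re.search(pattern, message)
        if match:
            figures[key] = float(match.group(1))
    return figures


def exceeds_capacity(figures):
    """True when the single requested allocation is larger than the whole GPU."""
    return figures["requested"] > figures["total"]


figures = parse_oom(OOM_MESSAGE)
print(figures)
print("request exceeds total capacity:", exceeds_capacity(figures))
```

Here the request (67.91 GiB) is several times the card's 11.99 GiB, and reserved memory (2.62 GiB) is barely above allocated memory (2.58 GiB), so fragmentation is unlikely to be the cause. Reducing the workload, for example using a smaller image size, is the more plausible direction.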

    User friendly error message:

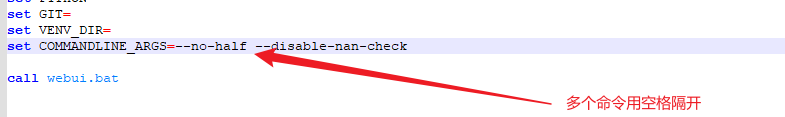

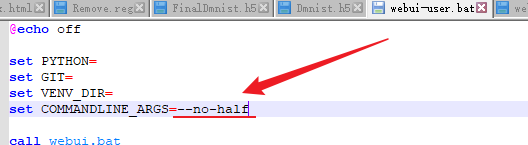

Solution:

Reference: "A Concise Guide to Deploying Stable Diffusion Locally (Windows)", 知乎 (zhihu.com)

    Error: 'A tensor with all NaNs was produced in Unet. Use --disable-nan-check commandline argument to disable this check.'. Check your schedules/ init values please. Also make sure you don't have a backwards slash in any of your PATHs - use / instead of \. Full error message is in your terminal/ cli.
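The error text above gives two concrete hints: use `/` instead of `\` in paths, and optionally pass `--disable-nan-check`. The sketch below illustrates both. The helper names are hypothetical, and the `set COMMANDLINE_ARGS=` line is the one found in the stock webui-user.bat. Note that disabling the check only silences the error and does not remove the NaNs. The webui also has a `--no-half` flag that is often suggested for this error, but whether it helps in this case is an assumption.

```python
from pathlib import PureWindowsPath


def to_forward_slashes(path):
    """Rewrite a Windows-style path with / separators, as the error text advises."""
    return PureWindowsPath(path).as_posix()


def commandline_args_line(flags):
    """Build the `set COMMANDLINE_ARGS=...` line for webui-user.bat."""
    return "set COMMANDLINE_ARGS=" + " ".join(flags)


print(to_forward_slashes(r"E:\DeepLearning\stable-diffusion-webui"))
print(commandline_args_line(["--disable-nan-check"]))
```

The first call shows how the install path from this post would be written with forward slashes. The second produces a line that could replace the empty `set COMMANDLINE_ARGS=` entry in webui-user.bat.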