EN

Projects

Traffic Statistics and Trajectory Tracking Based on YOLOv5 and Deep Sort

https://blog.csdn.net/JulyLi2019/article/details/124047020

A Nice Project: https://github.com/mikel-brostrom/yolo_tracking (5.3K stars)

Issues and Solutions:

To obtain dets from detector(im), you need an object detector instance that accepts an image (im) as input and returns the bounding boxes, confidence scores, and class labels of the detected objects. Once you have a working object detector, its output can replace the hardcoded dets value.

Generally, you need to follow these steps:

1. Import Your Detector

You may need to import some libraries or modules, depending on the object detector you are using. For example, if you are using a YOLO detector, you might need to import related libraries or modules.

    from my_detector import MyDetector  # Modify according to your actual situation

2. Initialize the Detector

Before starting the loop, you need to create an instance of the detector. Usually, you need to provide some parameters, such as the path to the model weights, device type, etc.

    detector = MyDetector(…)

3. Run the Detector and Get Detection Results

In the main loop, you need to run the detector with the current frame im as input and then get the dets.

    dets = detector(im)

Below is a hypothetical complete code snippet, assuming detector(im) returns a NumPy array with the same shape as the hardcoded dets:

    from my_detector import MyDetector  # Modify to your actual import
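A runnable version of such a loop might look like the sketch below. Everything in it is an assumption made for illustration: `MyDetector` is a stub that returns fixed boxes instead of running a model, the `(N, 6)` row layout `[x1, y1, x2, y2, confidence, class]` is a guess at the shape of the hardcoded dets, and `to_tracker_dets` is a hypothetical helper. The tracker call is left as a comment because its exact signature depends on the tracking library and version you use.

```python
import numpy as np


class MyDetector:
    """Hypothetical stand-in for a real detector (for example a YOLO wrapper)."""

    def __init__(self, conf_threshold=0.25):
        self.conf_threshold = conf_threshold

    def __call__(self, im):
        # A real detector would run inference on `im`. This stub returns fixed
        # rows [x1, y1, x2, y2, confidence, class] so the example needs no weights.
        raw = np.array([
            [144.0, 212.0, 578.0, 480.0, 0.82, 0.0],
            [425.0, 281.0, 576.0, 472.0, 0.56, 65.0],
            [10.0, 10.0, 40.0, 40.0, 0.10, 2.0],  # below the confidence threshold
        ])
        return raw[raw[:, 4] >= self.conf_threshold]


def to_tracker_dets(dets):
    """Coerce detector output to a float32 (N, 6) array, also when N == 0."""
    return np.asarray(dets, dtype=np.float32).reshape(-1, 6)


detector = MyDetector(conf_threshold=0.25)
frames = [np.zeros((480, 640, 3), dtype=np.uint8) for _ in range(2)]  # fake video

for im in frames:
    dets = to_tracker_dets(detector(im))
    # tracks = tracker.update(dets, im)  # assumed call; check your tracker's API
    print(dets.shape)
```

Reshaping to `(-1, 6)` keeps the array two-dimensional even for frames without detections, which is usually what a tracker expects.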

Note:

- The detector should be an object that can take an image as input and output detection results. detector(im) should return an array containing the bounding boxes, confidence scores, and class labels of the detected objects.

- In the actual code, you might need to process the detector’s output to match the input requirements of the DeepOCSORT tracker.

YOLOv5+Deepsort for Vehicle and Pedestrian Tracking and Counting: https://github.com/Sharpiless/Yolov5-deepsort-inference

DeepSort Target Tracking, ROI Counting, Drawing Trajectories: https://codeantenna.com/a/vaIQfAGKoj

Multi-Object Tracking Principles

Multi-Object Tracking (MOT)–DeepSort Principles: https://aitechtogether.com/article/40878.html

Main improvements over Sort: Utilizes ReID model to extract appearance semantic features, adding appearance information; introduces matching cascade and trajectory confirmation (Confirmed, Tentative, Deleted).

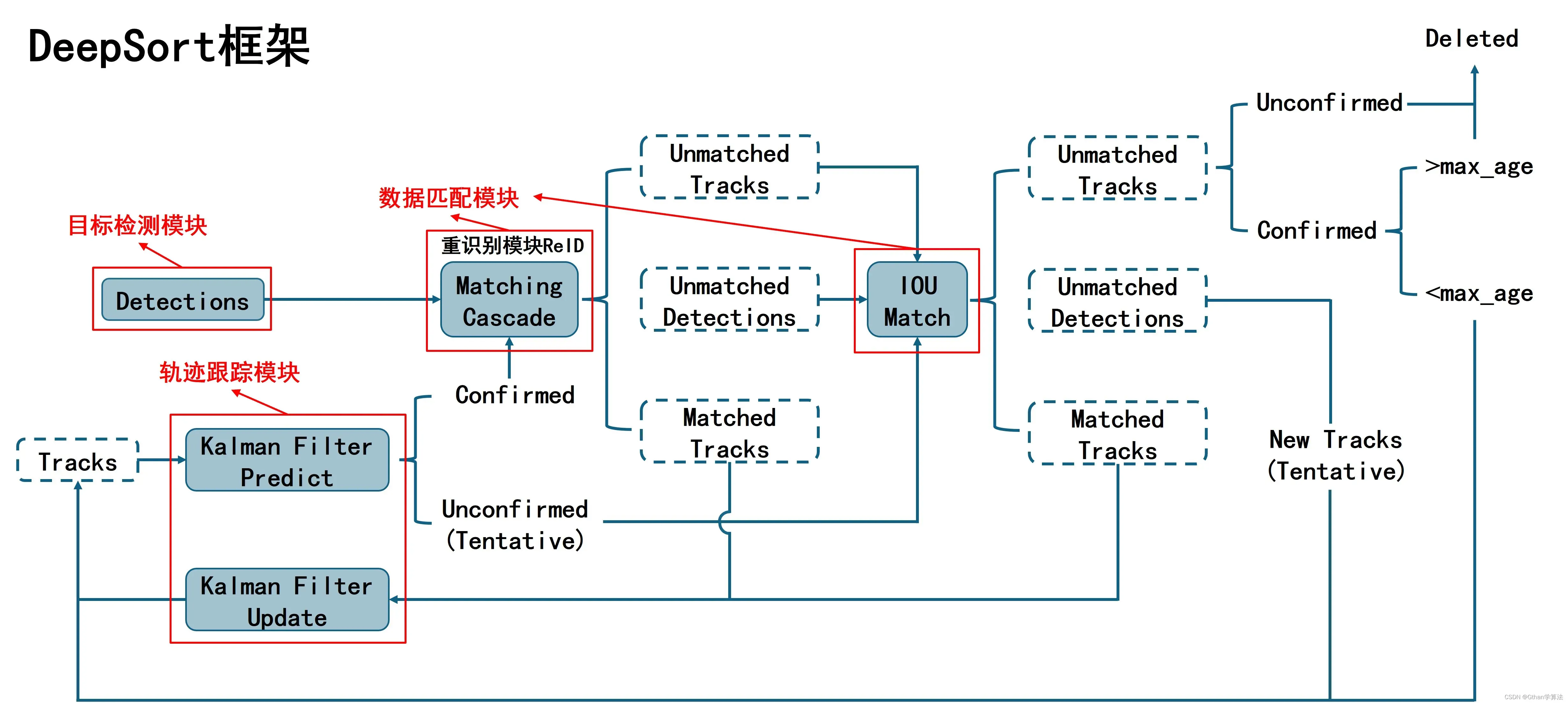

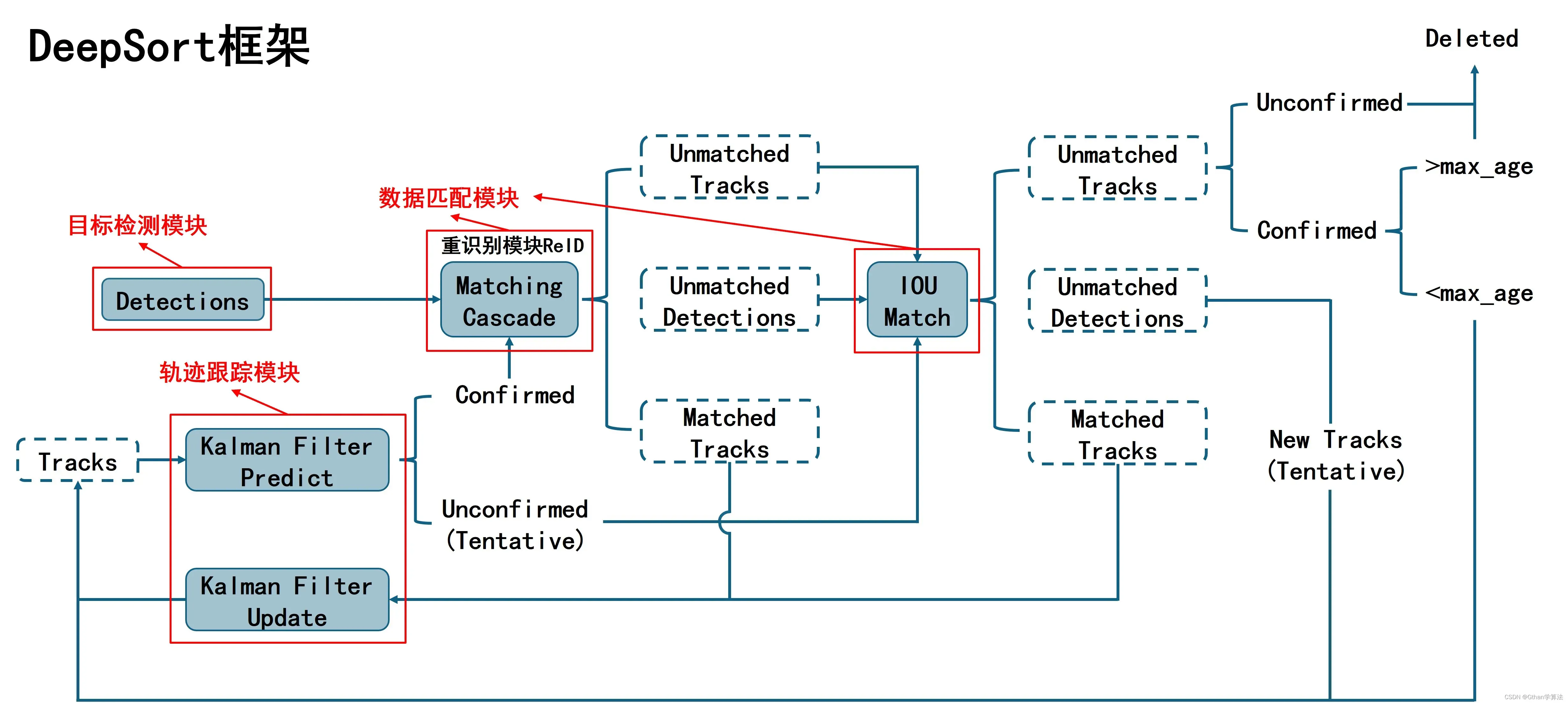

DeepSort Main Modules:

- Object Detection Module: Runs the object detection network on each input frame to obtain its target frames (the bounding boxes of the detected targets).

- Trajectory Tracking Module: Uses Kalman filtering for trajectory prediction and update, obtaining new trajectory sets.

- Data Matching Module: Associates trajectories and target frames through matching cascade and IOU matching.

DeepSort Main Process: Detector gets target frames in the current video frame -> Kalman filter predicts the trajectory set for the next frame based on the current frame’s trajectory set -> Predicted trajectories are matched with target frames in the next frame -> Kalman filter updates successfully matched trajectories.

Process Analysis

The entire process is summarized as follows:

1. For the first frame, initialize a trajectory from each detected target frame and run Kalman filter prediction for the next moment. Newly initialized trajectories start in the uncertain (Tentative) state.

2. Perform matching cascade between the confirmed trajectories of the previous moment and the target frames detected at the current moment. Trajectories and target frames that fail the matching cascade are passed on to IOU matching. Successfully matched trajectories and target frames undergo Kalman filter prediction and update.

3. Perform IOU matching on the trajectories and target frames that failed the matching cascade, together with the uncertain trajectories from the previous frame. A trajectory that fails this matching as well is deleted if it is still uncertain, or if it is confirmed but has exceeded the maximum allowed number of consecutive matching failures; otherwise it only undergoes Kalman filter prediction. Each target frame that remains unmatched initializes a new trajectory for Kalman filter prediction. Successfully matched trajectories and target frames undergo Kalman filter prediction and update.

4. Repeat steps 2 and 3 until the end of the video.

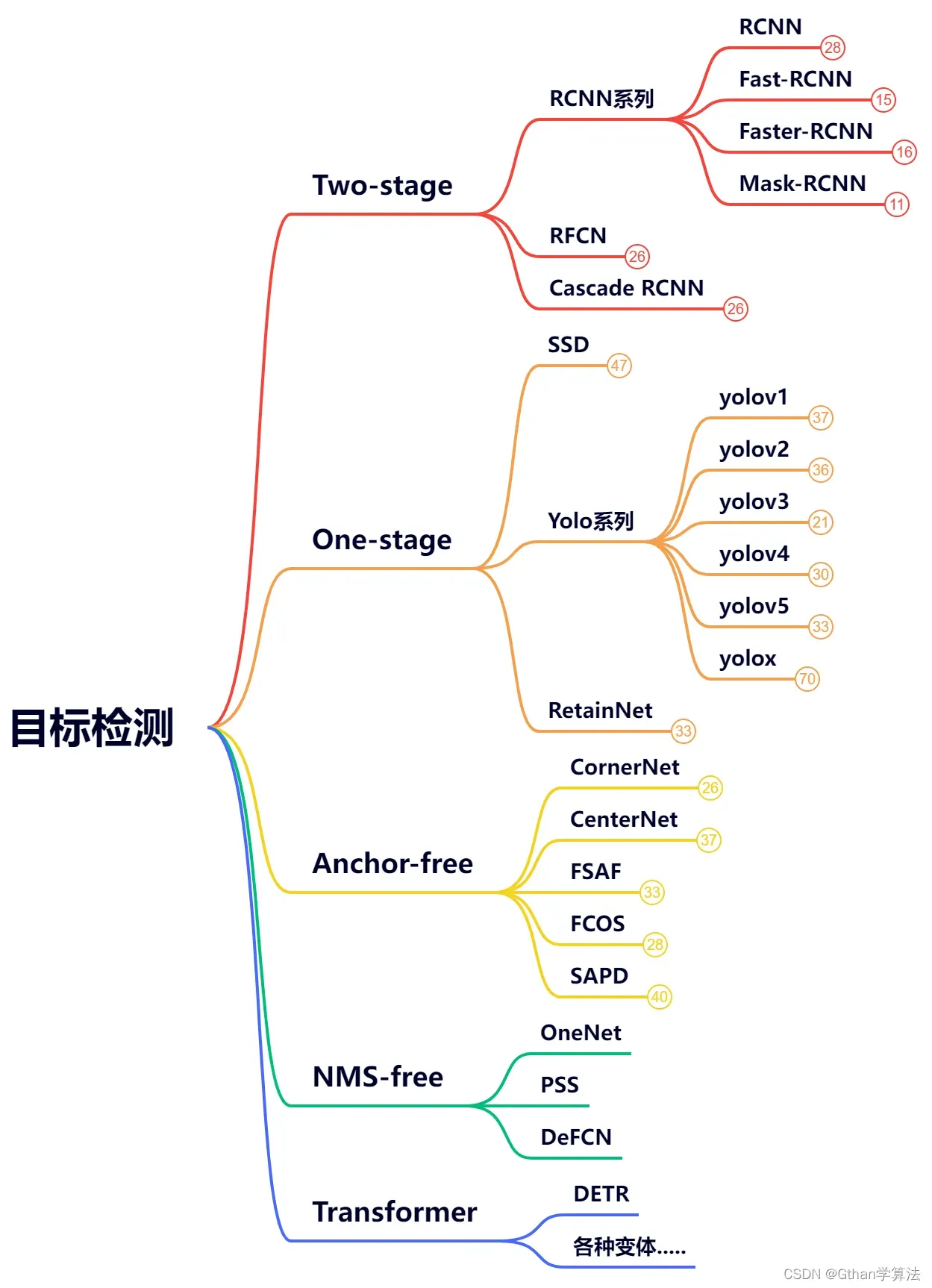

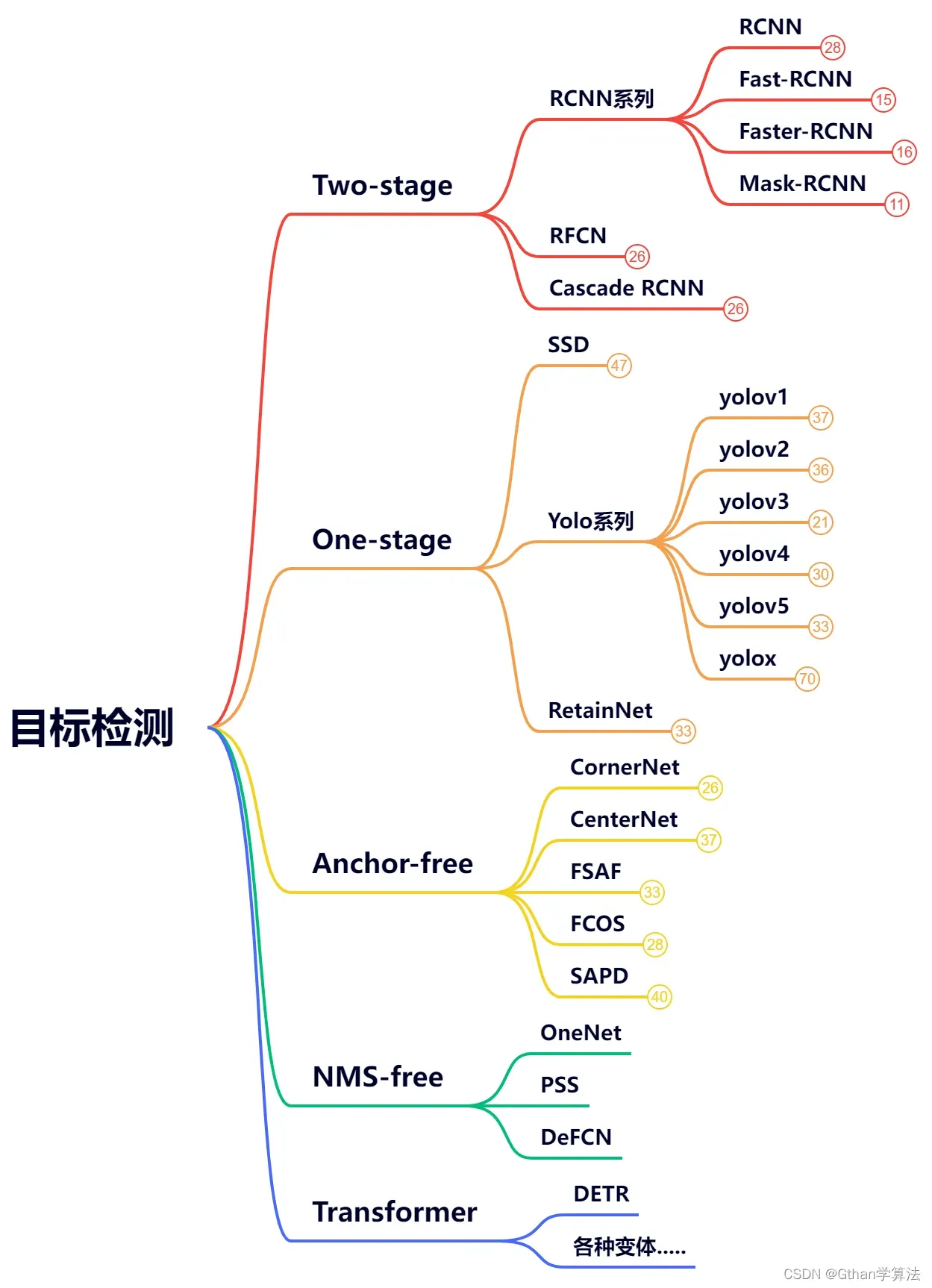

Object Detection Model Overview

The development route of deep learning-based object detection models can be summarized as follows:

Anchor-based Two-stage models came first and, because of their slow detection speed, gradually gave way to One-stage models. However, anchor design involves hyperparameters, which makes it hard to cover targets of all sizes. To maintain accuracy, a large number of anchors had to be used, which consumes computation and memory.

Later, Anchor-free models were proposed. They detect key points (centers) instead, which solves the problems of anchors and greatly reduces the number of model hyperparameters. In addition, most object detection models need non-maximum suppression (NMS) to post-process the prediction results. NMS-free models were proposed to remove this step, improving detection speed and achieving true end-to-end detection.

Currently, Transformer architectures have achieved a series of successes in the vision field (ViT, Swin Transformer). Transformer-based object detection models have shown superior performance and naturally eliminate both manually designed anchors and NMS post-processing, making them excellent end-to-end detectors.

Trajectory Tracking Module

The trajectory tracking module is mainly completed through the Track class and the Tracker class, with specific functions as follows:

- Track Class: Stores the status and information of a single trajectory and is responsible for the prediction and update of a single trajectory.

- Tracker Class: Stores the status and information of all trajectories (sets), responsible for the initialization of trajectories and the prediction and update of all trajectories (sets), encapsulating the data matching module.

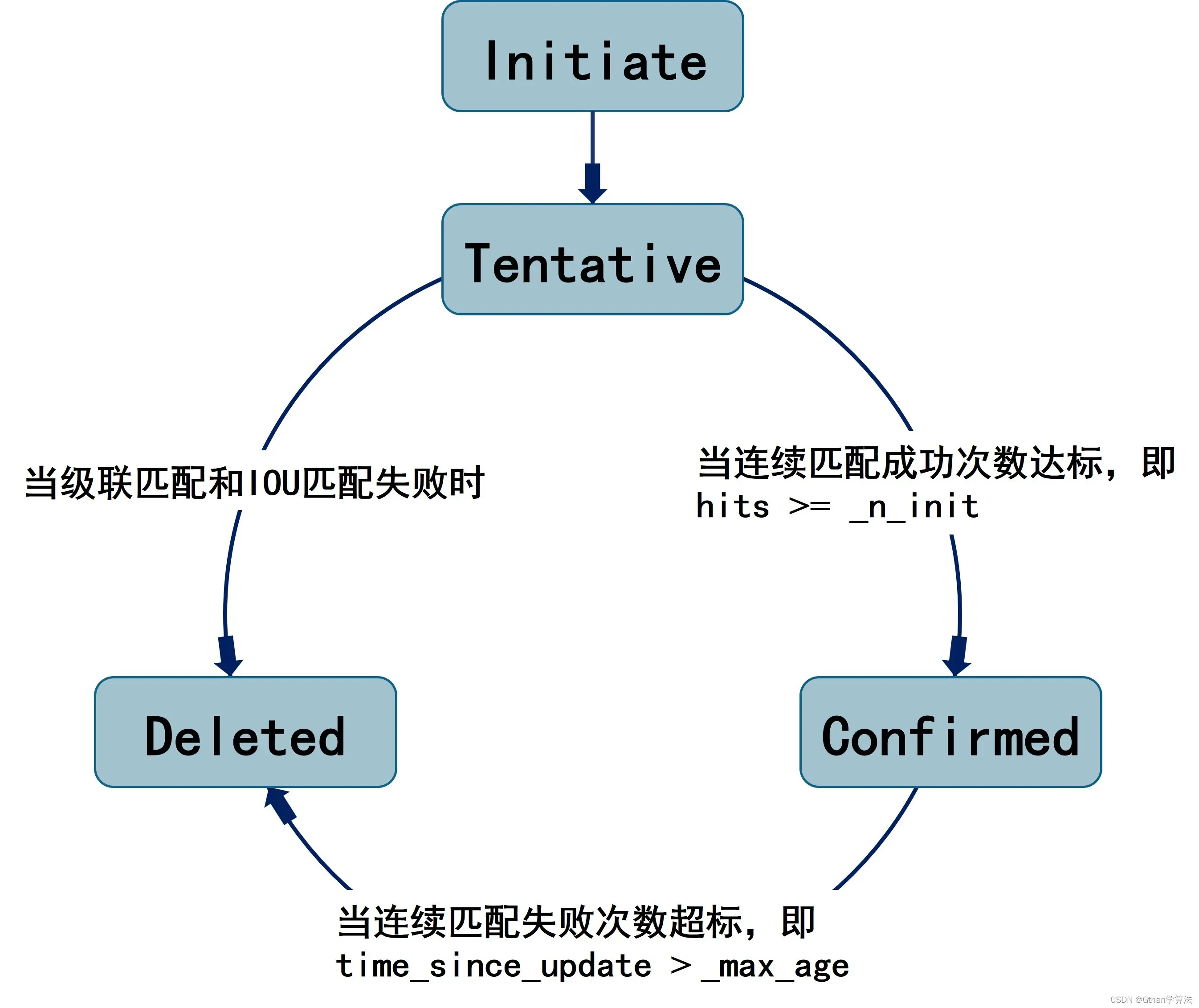

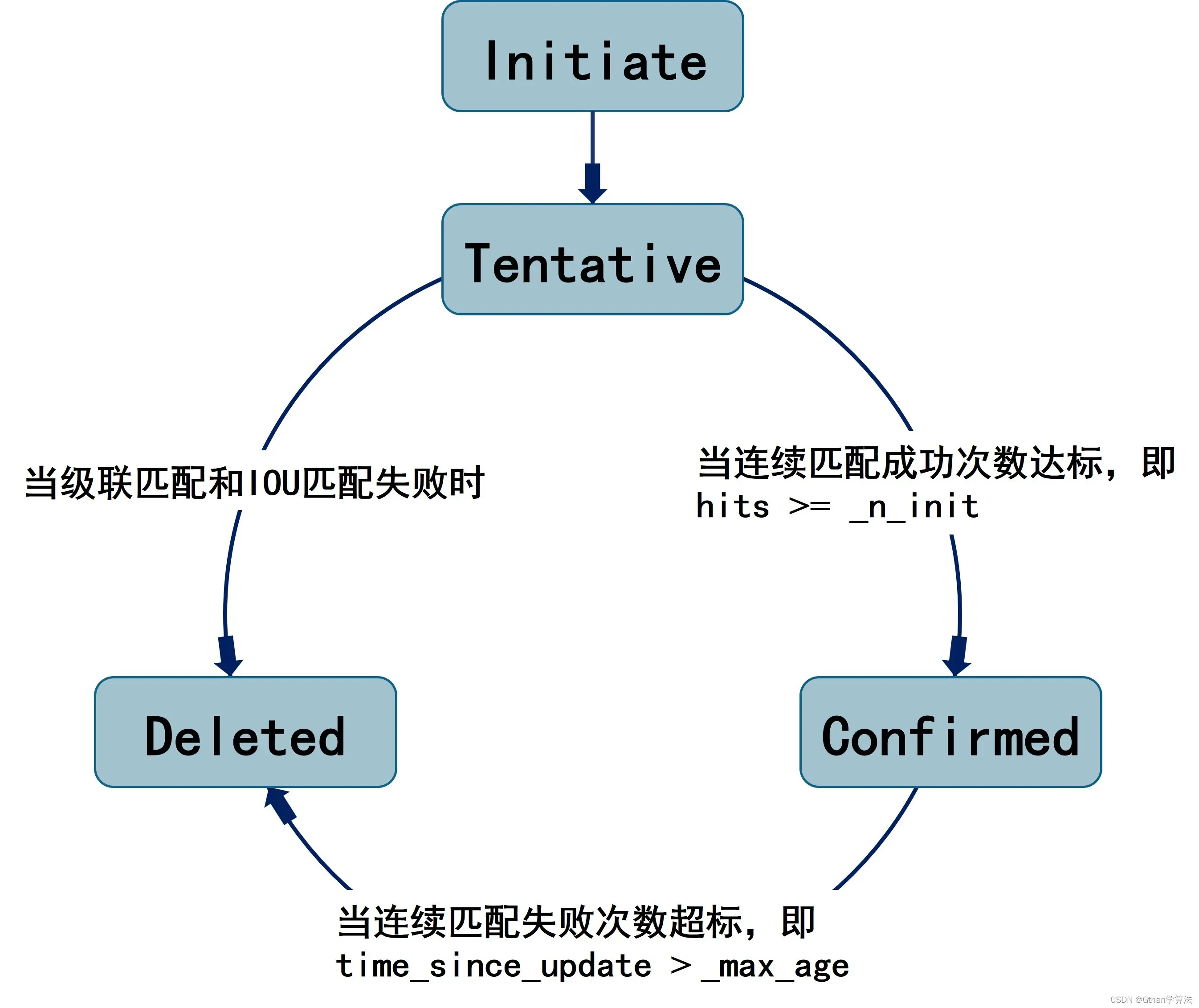

Track Class

The Track Class is the basic unit of a trajectory, storing the position and velocity information of the trajectory through mean and covariance, using Kalman filtering to predict and update the trajectory information; it also defines three states (Tentative, Confirmed, Deleted) and the conversion relationships between these states. The three states are as follows:

Tentative State: The initial (default) state of a trajectory. Each time a trajectory in this state is updated with a matched target, its count of successful matches (hits) increases by 1; once the count reaches the specified number (_n_init, default 3), the trajectory converts to the Confirmed state. If the trajectory is still Tentative and has not matched any target after matching cascade and IOU matching, it converts to the Deleted state.

Confirmed State: The state of a trajectory that has been matched successfully. Each prediction that is not followed by an update increases the count of consecutive matching failures (time_since_update) by 1; once the count exceeds the maximum allowed (_max_age, default 70), the trajectory converts to the Deleted state.

Deleted State: Represents the invalid state of the trajectory. The trajectory is deleted from all trajectories (sets).
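The three states and their transitions can be sketched as a small state machine. This is a simplified illustration rather than the repository's Track class: the Kalman mean and covariance are left out, the method names `predict`, `update`, and `mark_missed` are assumed, and the small `max_age=2` in the usage part is chosen only to keep the run short.

```python
from enum import Enum


class TrackState(Enum):
    TENTATIVE = 1
    CONFIRMED = 2
    DELETED = 3


class Track:
    """State bookkeeping only; the Kalman filter state is omitted."""

    def __init__(self, track_id, n_init=3, max_age=70):
        self.track_id = track_id
        self.hits = 1  # the detection that created the track counts as a hit
        self.time_since_update = 0
        self.state = TrackState.TENTATIVE
        self._n_init = n_init
        self._max_age = max_age

    def predict(self):
        # Called once per frame, before matching.
        self.time_since_update += 1

    def update(self):
        # Called when the track was matched to a detection in this frame.
        self.hits += 1
        self.time_since_update = 0
        if self.state is TrackState.TENTATIVE and self.hits >= self._n_init:
            self.state = TrackState.CONFIRMED

    def mark_missed(self):
        # Called when the track stayed unmatched in this frame.
        if self.state is TrackState.TENTATIVE:
            self.state = TrackState.DELETED
        elif self.time_since_update > self._max_age:
            self.state = TrackState.DELETED


track = Track(track_id=1, n_init=3, max_age=2)
for _ in range(2):  # two more matched frames bring hits from 1 to 3
    track.predict()
    track.update()
state_after_hits = track.state

misses = 0
while track.state is not TrackState.DELETED:
    track.predict()
    track.mark_missed()
    misses += 1

print(state_after_hits, misses)
```

With `max_age=2` the confirmed track survives two missed frames and is deleted on the third, while a Tentative track is deleted on its first miss.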

Tracker Class

The Tracker Class is the core part of the DeepSort framework. It integrates the information and status of all trajectories and predicts each trajectory in turn through Kalman filtering. Because updating the trajectory set involves the data matching module, the update function of the Tracker class encapsulates matching cascade and IOU matching. Based on the matching results, it updates the status and information of all trajectories in the set with Kalman filtering, and initializes new trajectories for unmatched targets (including the target frames of the first frame). Read this part together with the matching cascade and IOU matching sections and the DeepSort framework diagram.

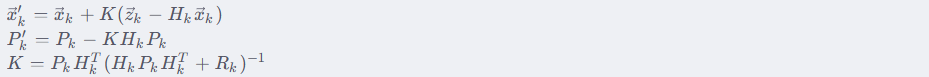

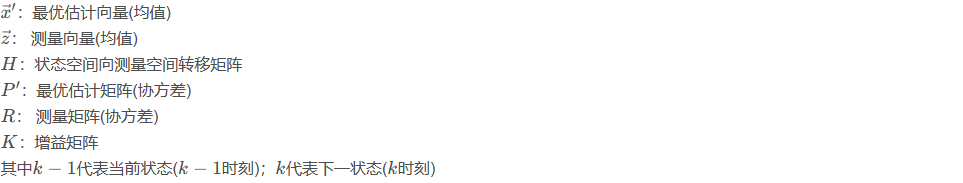

Kalman Filtering

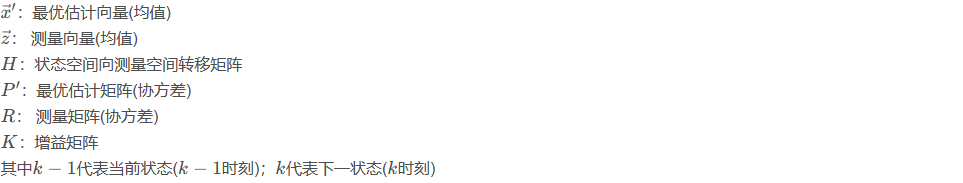

Kalman filtering mainly consists of prediction and update. Prediction makes a well-founded estimate of the system’s next state from its current state; update combines the measured and predicted values to compute the optimal estimate of the system’s state. For details, refer to the blog post “Kalman Filtering Principles Explained”, which gives the following expressions:

Prediction:

Update:
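In the usual textbook notation for a linear Kalman filter, the two steps can be written as below. The symbols are the conventional ones and are an assumption here, not necessarily those used in the referenced blog: $x$ is the state mean, $P$ its covariance, $F$ the state-transition matrix, $Q$ the process noise covariance, $z$ the measurement, $H$ the measurement matrix, $R$ the measurement noise covariance, and primes mark predicted quantities.

```latex
% Prediction
\begin{aligned}
x' &= F x \\
P' &= F P F^{\top} + Q
\end{aligned}

% Update
\begin{aligned}
y &= z - H x' \\
S &= H P' H^{\top} + R \\
K &= P' H^{\top} S^{-1} \\
x &= x' + K y \\
P &= (I - K H) P'
\end{aligned}
```

Here $y$ is the innovation (measurement minus predicted measurement), $S$ its covariance, and $K$ the Kalman gain that weights the measurement against the prediction.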

Data Matching Module

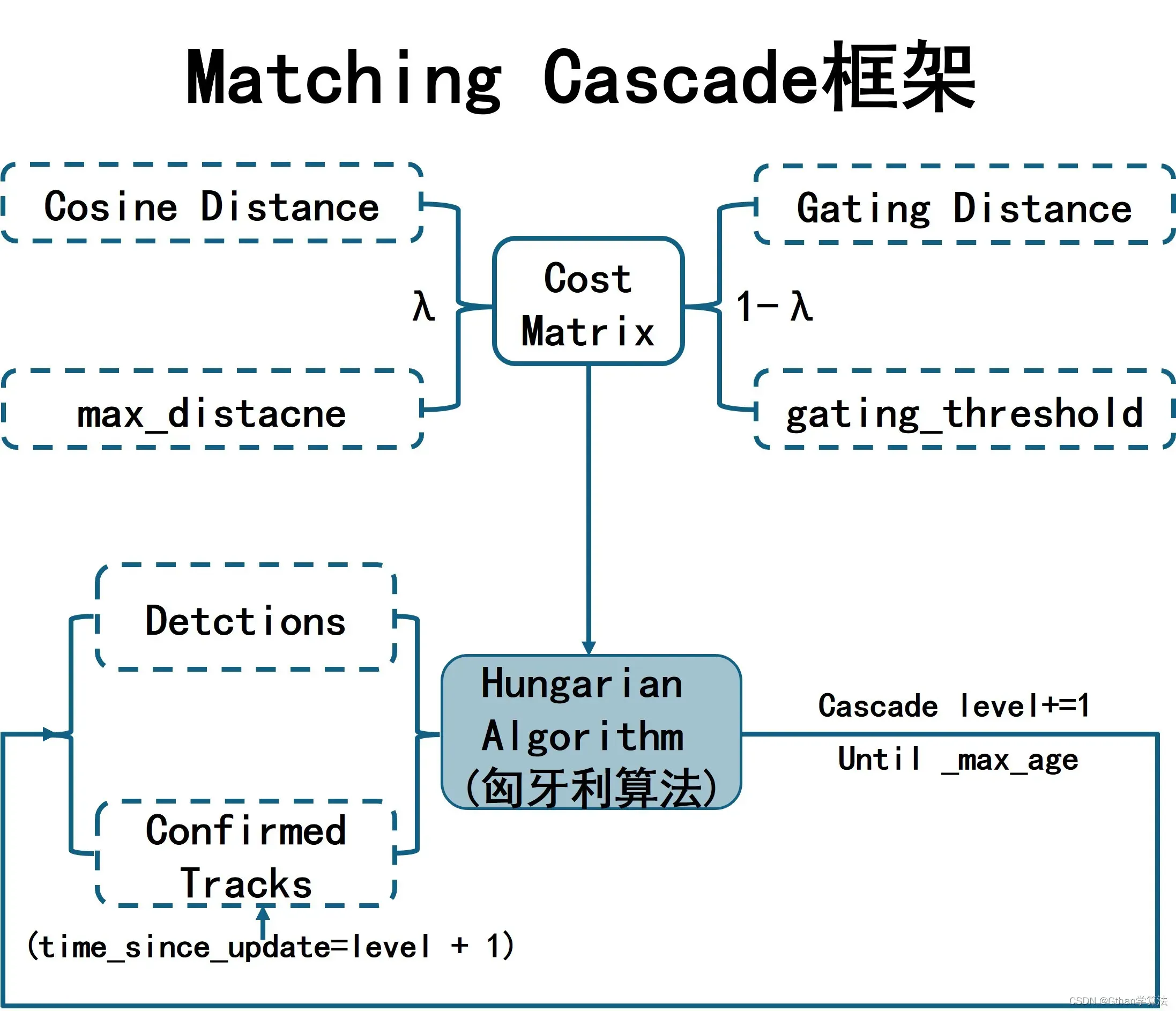

The data matching module mainly associates trajectories and target frames through matching cascade, IOU matching, and the Hungarian algorithm. Specific functions are as follows:

- Matching Cascade: Calculates similarity using appearance features (extracted by ReID module) combined with motion features, associating through the Hungarian algorithm.

- IOU Matching: Calculates IOU using the area of trajectories and target frames, associating through the Hungarian algorithm.

ReID Module

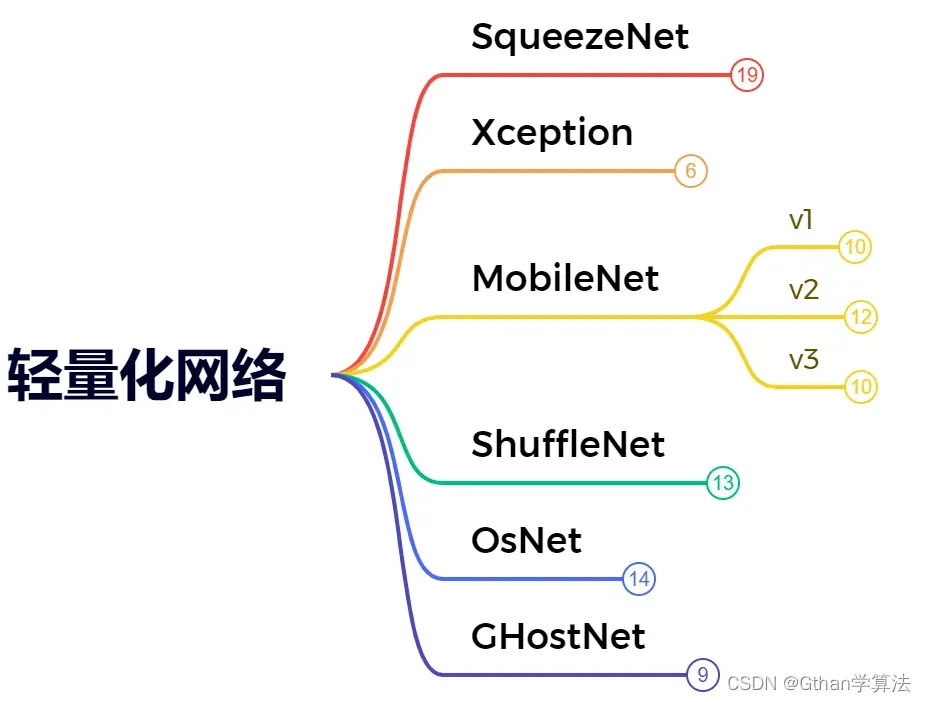

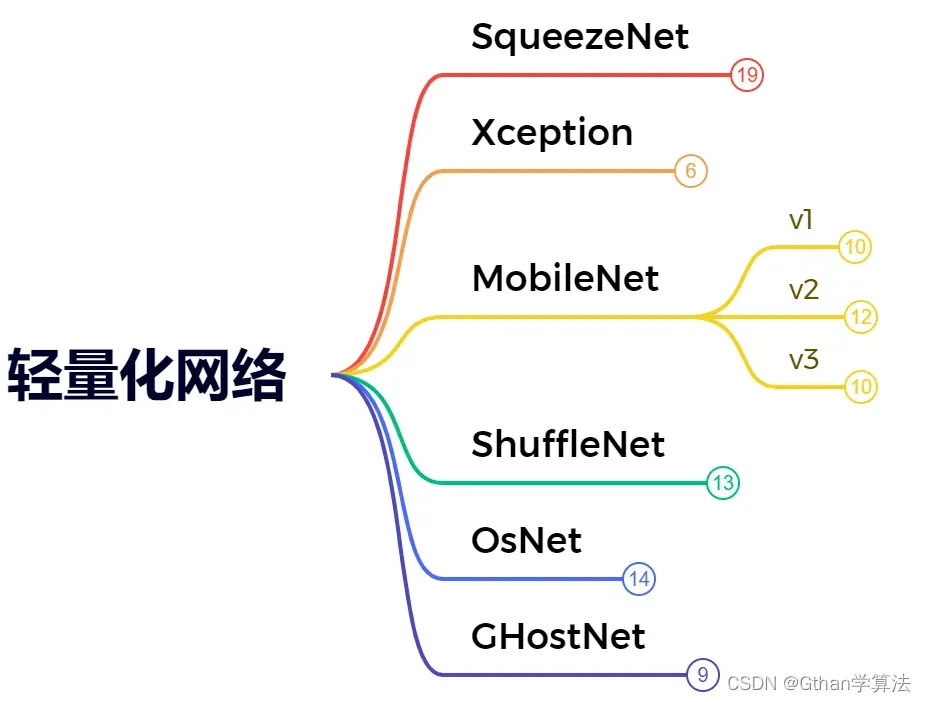

Re-identification (ReID) uses a dedicated model to determine whether targets appearing at different times belong to the same object. To reduce ID-Switch, multi-object tracking introduces a ReID module that extracts the appearance semantic features of each target frame, which are later used to calculate similarity in the matching cascade. The ReID module is independent of the object detection and trajectory tracking modules. To meet the real-time requirements of multi-object tracking, the source code adopts lightweight network models (e.g., OSNet).

The core of lightweight network models is to optimize the network in terms of model size and inference speed while maintaining accuracy. The lightweight network models collected so far include: SqueezeNet, Xception, MobileNet, ShuffleNet, OSNet, and GhostNet.

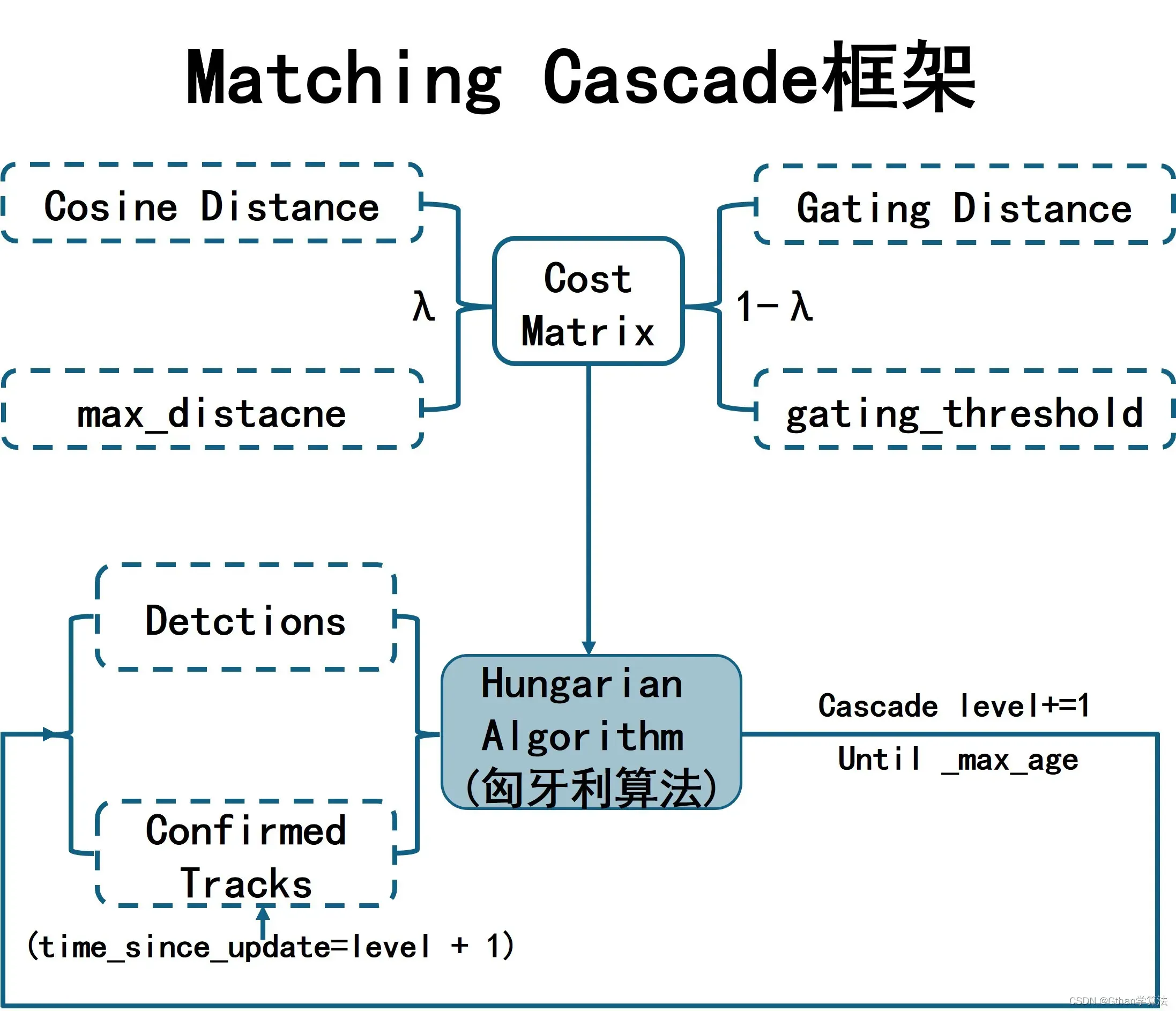

Matching Cascade and IOU Matching

Matching cascade and IOU matching associate trajectories with target frames and are encapsulated in the _match function of the Tracker class. The result consists of the successfully matched trajectories and target frames, the unmatched trajectories, and the unmatched target frames. Post-processing is then performed according to these results; read this part together with the core code of the Tracker class.

In matching cascade, the distance metric function (metric) that associates trajectories and target frames is encapsulated in the NearestNeighborDistanceMetric class.
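A nearest-neighbor appearance distance of this kind can be sketched as follows. This is an illustration under stated assumptions, not the class itself: cosine distance is assumed as the metric, features are plain Python lists, and the function names are hypothetical.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest_neighbor_distance(gallery, feature):
    """Smallest distance between `feature` and any feature stored for a trajectory."""
    return min(cosine_distance(stored, feature) for stored in gallery)


def appearance_cost_matrix(galleries, detection_features):
    """Rows are trajectories, columns are detections."""
    return [[nearest_neighbor_distance(gallery, feature)
             for feature in detection_features]
            for gallery in galleries]


galleries = [
    [[1.0, 0.0], [0.8, 0.6]],  # trajectory 0: two stored appearance features
    [[0.0, 1.0]],              # trajectory 1: one stored appearance feature
]
detection_features = [[0.6, 0.8], [1.0, 0.0]]

cost = appearance_cost_matrix(galleries, detection_features)
print(cost)
```

Taking the minimum over the stored features means a detection only has to resemble one past appearance of the target, which helps when the target changes pose or lighting.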

Matching cascade computes the cost matrix as a weighted combination of the gated distance matrix (motion features) and the appearance semantic feature distance matrix (appearance features). Both distances are limited by corresponding thresholds so that overly large values are rejected. Matching then proceeds layer by layer up to the maximum cascade depth (the same as _max_age), where each layer corresponds to a number of consecutive matching failures (time_since_update): trajectories with fewer failures are matched first, and those with more failures are matched later. In this way, matching cascade can recover targets that reappear after occlusion and reduce ID-Switch.
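The layer-by-layer priority can be illustrated with the sketch below. It assumes the combined cost matrix has already been computed, uses an exhaustive search as a stand-in for the Hungarian algorithm (fine only for tiny examples), and all function names are hypothetical.

```python
from itertools import permutations


def assign(cost, track_ids, det_ids, max_cost):
    """Minimum-cost assignment by exhaustive search (stand-in for Hungarian)."""
    if not track_ids or not det_ids:
        return []

    def clipped(t, d):
        # Costs above the threshold are clipped so they all look equally bad.
        return min(cost[t][d], max_cost + 1e-5)

    if len(track_ids) <= len(det_ids):
        candidates = [list(zip(track_ids, p))
                      for p in permutations(det_ids, len(track_ids))]
    else:
        candidates = [list(zip(p, det_ids))
                      for p in permutations(track_ids, len(det_ids))]
    best = min(candidates, key=lambda pairs: sum(clipped(t, d) for t, d in pairs))
    return [(t, d) for t, d in best if cost[t][d] <= max_cost]


def matching_cascade(cost, time_since_update, det_ids, cascade_depth, max_cost):
    """Match trajectories layer by layer, most recently updated first."""
    unmatched_dets = list(det_ids)
    matches = []
    for level in range(1, cascade_depth + 1):
        if not unmatched_dets:
            break
        layer = [t for t, age in enumerate(time_since_update) if age == level]
        layer_matches = assign(cost, layer, unmatched_dets, max_cost)
        matches += layer_matches
        taken = {d for _, d in layer_matches}
        unmatched_dets = [d for d in unmatched_dets if d not in taken]
    matched_tracks = {t for t, _ in matches}
    unmatched_tracks = [t for t in range(len(time_since_update))
                        if t not in matched_tracks]
    return matches, unmatched_tracks, unmatched_dets


# Trajectory 0 has missed two frames; trajectories 1 and 2 were seen last frame.
time_since_update = [3, 1, 1]
cost = [[0.10, 0.90],
        [0.20, 0.80],
        [0.70, 0.30]]

matches, unmatched_tracks, unmatched_dets = matching_cascade(
    cost, time_since_update, det_ids=[0, 1], cascade_depth=70, max_cost=0.5)
print(matches, unmatched_tracks, unmatched_dets)
```

In this example trajectory 0 has the lowest cost for detection 0, yet it stays unmatched because the trajectories updated in the previous frame are served first and take both detections.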

IOU matching builds its cost matrix from the IOU between trajectories and target frames (in the source code the cost is 1 − IOU, so a larger overlap means a lower cost). The IOU is the overlapping area of a trajectory box and a target frame divided by the area of their union. The implementation is in the iou_matching module of the source code. Unlike matching cascade, the matching is done directly on the corresponding trajectories and target frames, without layers.
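A minimal sketch of the IOU computation and the resulting cost matrix is shown below. The corner format `(x1, y1, x2, y2)`, the function names, and the gate value 0.7 are assumptions made for the example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_cost_matrix(track_boxes, det_boxes):
    """Cost is 1 - IOU: rows are trajectories, columns are detections."""
    return [[1.0 - iou(t, d) for d in det_boxes] for t in track_boxes]


track_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
det_boxes = [(5, 0, 15, 10), (20, 20, 30, 30), (100, 100, 110, 110)]

cost = iou_cost_matrix(track_boxes, det_boxes)

# Pairs whose cost exceeds the gate are not allowed to match.
max_iou_distance = 0.7  # assumed value for illustration
admissible = [(t, d)
              for t, row in enumerate(cost)
              for d, c in enumerate(row)
              if c <= max_iou_distance]
print(cost, admissible)
```

The first pair overlaps by one third, giving a cost of about 0.667 that still passes the gate; the third detection overlaps nothing and would start a new trajectory.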

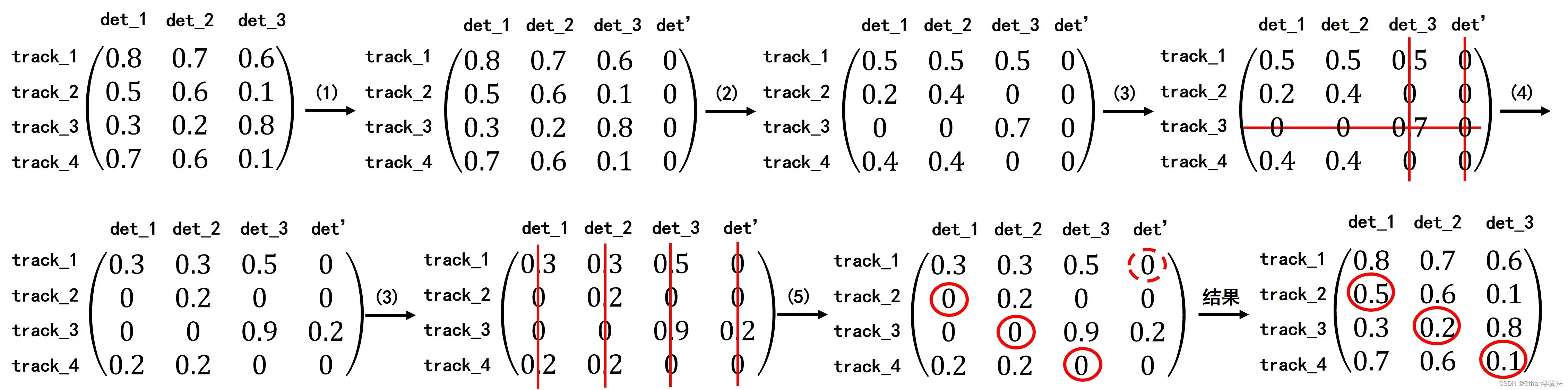

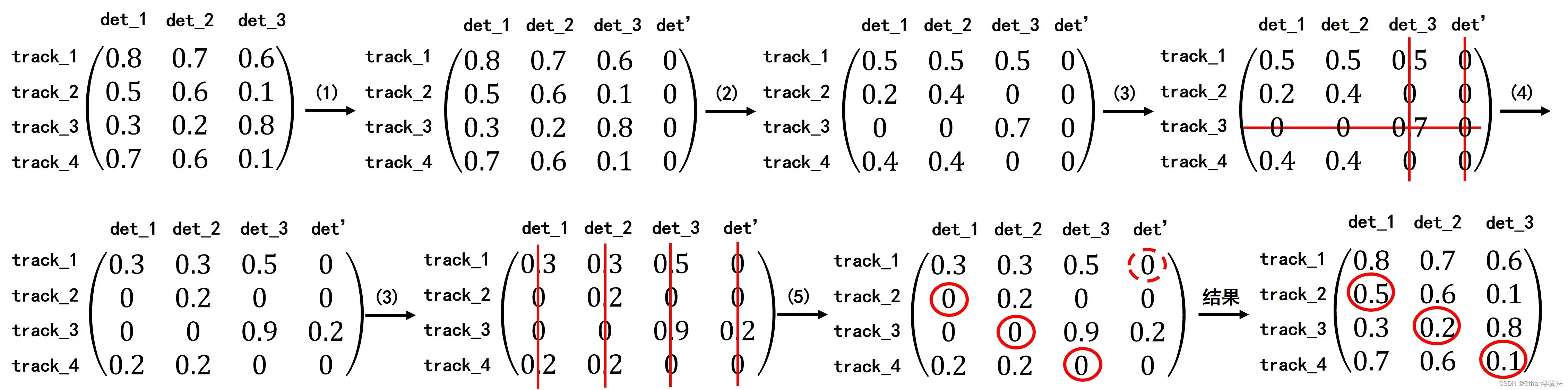

Hungarian Algorithm

The Hungarian algorithm solves the assignment problem: given a cost matrix, it finds the optimal matching, that is, the one with the minimum total cost.

It relies on the following theorem:

Adding the same number to, or subtracting it from, every element of a row or column of the cost matrix yields a new cost matrix whose optimal matching is the same as that of the original cost matrix.

Algorithm steps:

1. If the cost matrix is not square, pad it with zeros to convert it into a square matrix.

2. Subtract the minimum value of each row from every element of that row, then subtract the minimum value of each column from every element of that column.

3. Cover all zero elements in the cost matrix with the fewest possible horizontal or vertical lines.

4. Find the minimum value among the elements left uncovered in step 3, subtract it from every uncovered element, and add it to the elements at the intersections of the covering lines.

5. Repeat steps 3 and 4 until the number of covering lines equals the dimension of the square matrix.

Process Demonstration (assuming the cost matrix dimension is 4×3):
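As a small worked example with a 4×3 cost matrix, the sketch below carries out the padding and the row and column reduction, and checks the theorem numerically. The matrix values are made up for illustration, and an exhaustive search over permutations stands in for the line-covering steps, so this is a check of the result rather than an implementation of the full algorithm.

```python
from itertools import permutations


def pad_to_square(cost, fill=0):
    """Pad a rectangular cost matrix with `fill` until it is square."""
    n_rows, n_cols = len(cost), len(cost[0])
    size = max(n_rows, n_cols)
    padded = [list(row) + [fill] * (size - n_cols) for row in cost]
    padded += [[fill] * size for _ in range(size - n_rows)]
    return padded


def reduce_rows_and_columns(square):
    """Subtract each row's minimum, then each column's minimum."""
    rows = [[value - min(row) for value in row] for row in square]
    col_mins = [min(col) for col in zip(*rows)]
    return [[value - col_mins[j] for j, value in enumerate(row)] for row in rows]


def brute_force_assignment(square):
    """Try every permutation; result[i] is the column assigned to row i."""
    n = len(square)
    best = min(permutations(range(n)),
               key=lambda cols: sum(square[i][cols[i]] for i in range(n)))
    return list(best)


# 4 trajectories (rows) and 3 detections (columns); values are illustrative.
cost = [[4, 1, 3],
        [2, 0, 5],
        [7, 6, 8],
        [6, 4, 1]]

square = pad_to_square(cost)
reduced = reduce_rows_and_columns(square)
assignment = brute_force_assignment(square)
assignment_reduced = brute_force_assignment(reduced)
total_cost = sum(square[i][j] for i, j in enumerate(assignment))
print(assignment, assignment_reduced, total_cost)
```

Rows 0, 1, and 3 are assigned to columns 1, 0, and 2, while row 2 lands on the padded column and therefore stays unmatched. The reduced matrix gives the same assignment, as the theorem states.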

DeepSort official repository: https://github.com/nwojke/deep_sort

Deep SORT Multi-Object Tracking Algorithm Code Analysis (Part 1 and Part 2):

https://zhuanlan.zhihu.com/p/133678626

https://zhuanlan.zhihu.com/p/133689982

Learning Videos on Bilibili

Computer Vision Practice: Smart Transportation Project DeepSort+YOLO Explanation

https://www.bilibili.com/video/BV1Yv4y1S7XH/

Yolov7-tracker Multiple Trackers

https://www.bilibili.com/video/BV1zT411A7oy/

https://github.com/JackWoo0831/Yolov7-tracker

ByteTrack Tracker: https://www.51cto.com/article/743776.html

Terminology

These three parameters are commonly used in the field of computer vision, especially in object detection and tracking.

NMS_MAX_OVERLAP: Non-maximum suppression threshold, set to 1 means no suppression.

Non-maximum suppression (NMS) is a technique commonly used in object detection to remove redundant overlapping boxes. Specifically, when multiple predicted boxes highly overlap and all predict the same object, we need a method to select the best one among them and suppress (or delete) the other boxes.

“NMS_MAX_OVERLAP” is a threshold used to decide when to suppress a predicted box. For example, if the overlap between two predicted boxes (measured by Intersection over Union, IOU) exceeds this threshold, the one with the lower score will be suppressed.

When “NMS_MAX_OVERLAP” is set to 1, a box would be suppressed only if its IOU with a higher-scoring box exceeded 1. Since IOU can never be greater than 1, no box is suppressed, which is equivalent to not performing non-maximum suppression.
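The effect of the threshold can be seen in a minimal greedy NMS sketch. The function names and the box format `(x1, y1, x2, y2)` are assumptions for this example; the comparison follows the rule stated above, where a box is suppressed when its IOU with an already kept box exceeds the threshold.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, max_overlap):
    """Return the indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep the box unless it overlaps a kept box by more than max_overlap.
        if all(iou(boxes[i], boxes[k]) <= max_overlap for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]

kept_half = non_max_suppression(boxes, scores, max_overlap=0.5)
kept_all = non_max_suppression(boxes, scores, max_overlap=1.0)
print(kept_half, kept_all)
```

The first two boxes have an IOU of about 0.82, so the lower-scoring one is dropped at a threshold of 0.5 but kept at a threshold of 1.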

MAX_IOU_DISTANCE: Maximum IOU distance threshold

This parameter is used in object tracking, in algorithms such as SORT or DeepSORT, where the goal is to follow objects from one frame to the next.

“MAX_IOU_DISTANCE” is the gating threshold of IOU matching. The IOU distance between a trajectory and a target frame is 1 − IOU, so a small distance means a large overlap. A pair whose IOU distance exceeds this threshold is not allowed to match, so the trajectory and the target frame are treated as different objects.

Overall, these two parameters involve calculating the overlap between predicted boxes or objects and performing certain operations (such as suppression or matching) based on this overlap.

NN_BUDGET: Maximum number of feature frames to save; if exceeded, it will perform rolling saves.

This means that when the number of features saved for a target exceeds the number specified by “NN_BUDGET”, the oldest features will be removed to make room for the newest features. This is similar to a fixed-size queue or rolling buffer.
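The rolling behaviour can be illustrated with a bounded deque. This is a sketch of the idea only: the budget of 3, the `samples` dictionary, and the `add_feature` helper are assumptions for the example, and strings stand in for real feature vectors.

```python
from collections import deque

NN_BUDGET = 3  # small illustrative value

samples = {}  # target id -> most recent appearance features


def add_feature(target_id, feature):
    """Store a feature; the oldest one is dropped once the budget is full."""
    samples.setdefault(target_id, deque(maxlen=NN_BUDGET)).append(feature)


for frame_idx in range(5):
    add_feature(7, f"feature_{frame_idx}")

stored = list(samples[7])
print(stored)
```

After five frames only the three newest features of target 7 remain.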

Model Changes

objdetector.py encapsulates an object detector for detecting objects in videos.

To change the model, you only need to modify the following parts given in objdetector.py:

    OBJ_LIST = ['person', 'car', 'bus', 'truck']

CN

项目

1、基于yolov5与Deep Sort的流量统计与轨迹跟踪

https://blog.csdn.net/JulyLi2019/article/details/124047020

2、一个不错的项目:https://github.com/mikel-brostrom/yolo_tracking 5.3K Star

遇到的问题及解决方案:

要使用detector(im)来获得dets的值,你需要有一个对象检测器(detector)的实例,该实例应该能够接受图像(im)作为输入,并输出检测到的物体的边界框、置信度和类别。如果你已经有了一个能用的对象检测器,可以替换硬编码的dets值。

一般来说,你需要做以下几步:

1. 导入你的检测器

你可能需要导入一些库或模块,这取决于你使用的对象检测器。例如,如果你使用的是YOLO检测器,你可能需要导入相关的库或模块。

    from my_detector import MyDetector  # 请根据你的实际情况修改

2. 初始化检测器

在循环开始前,你需要创建一个检测器实例。通常,你会需要提供一些参数,例如模型权重的路径、设备类型等。

    detector = MyDetector(…)

3. 运行检测器并获取检测结果

在主循环中,你需要用当前帧im作为输入运行检测器,然后获取dets。

    dets = detector(im)

下面是一个假设的完整代码片段,假设detector(im)返回一个NumPy数组,其形状和原硬编码的dets一致:

    from my_detector import MyDetector  # 修改为你的实际导入

注意:

detector应该是一个能够接收图像输入并输出检测结果的对象,detector(im)应该返回一个数组,其中包含检测到的对象的边界框、置信度和类别。在实际代码中,你可能需要对检测器的输出进行一些处理,以使其符合DeepOCSORT跟踪器的输入要求。

3、YOLOv5+Deepsort实现车辆行人追踪和计数:https://github.com/Sharpiless/Yolov5-deepsort-inference

4、deepsort目标跟踪、ROI计数、绘制轨迹:https://codeantenna.com/a/vaIQfAGKoj

多目标跟踪原理

多目标跟踪(MOT)–DeepSort原理:https://aitechtogether.com/article/40878.html

对比Sort的主要改进:利用ReID模型提取外观语义特征,加入外观信息;增加了级联匹配(Matching Cascade)和轨迹确认(Confirmed、Tentative、Deleted)

DeepSort主要模块:

(1)目标检测模块:通过目标检测网络,获取输入每一帧图片中的目标框

(2)轨迹跟踪模块:通过卡尔曼滤波进行轨迹预测和更新,获取新的轨迹集合

(3)数据匹配模块:通过级联匹配和IOU匹配将轨迹和目标框关联

DeepSort主要流程:检测器获取视频当前帧中目标框 -> 卡尔曼滤波根据当前帧的轨迹集合预测下一帧轨迹集合 -> 预测轨迹与下一帧检测目标框进行匹配 -> 卡尔曼滤波更新匹配成功的轨迹

流程分析

整个流程概括如下:

(1)将第一帧检测目标框初始化对应轨迹进行卡尔曼滤波预测下一时刻轨迹,其中初始化轨迹状态为不确定态

(2)将上一时刻确认态轨迹与当前时刻检测目标框进行级联匹配,级联匹配结果中匹配失败轨迹和匹配失败目标框用于后续IOU匹配,匹配成功轨迹和目标框进行卡尔曼滤波预测和更新

(3)将级联匹配结果中匹配失败轨迹和匹配失败目标框以及上一帧不确定态轨迹进行IOU匹配,匹配结果中匹配失败轨迹若仍为不确定态或为确定态但连续匹配失败次数超标则删除该轨迹;匹配失败轨迹为确定态且连续匹配失败次数未超标进行卡尔曼滤波预测;匹配失败目标框则初始化对应轨迹进行卡尔曼滤波预测;匹配成功轨迹和目标框进行卡尔曼滤波预测和更新

(4)重复(2)和(3),直到结束

目标检测模型概述

目前整理了有关基于深度学习的目标检测模型的发展路线:

首先以Anchor-based为基础的Two-stage模型因其检测速度慢的问题逐渐发展为One-stage模型。然而由于Anchor的设计存在超参数,难以覆盖所有尺寸目标;同时为了保证一定的精度,选择了大量的Anchor用来检测占用计算量和内存消耗。

后来以Anchor-free为基础的模型提出以检测关键点(中心点)的方式解决Anchor存在的问题,大大优化了模型超参数的数量。此外大多数目标检测模型需要对预测结果进行非极大值抑制(NMS)后处理,对此提出了NMS-free模型,旨在取消NMS后处理,提高检测速度,实现真正意义上的端到端检测。

目前Transformer架构在视觉领域(ViT,Swin Transformer)取得了一系列成功。自此以Transformer为基础的目标检测模型展现出了优越的性能并且基于Transformer的模型能够天然的消除人工设置的Anchor-based以及NMS后处理是一个优良的端到端检测器。

轨迹跟踪模块

轨迹跟踪模块主要通过Track类和Tracker类完成,具体功能如下:

Track类:存储单个轨迹的状态和信息以及负责单个轨迹的预测和更新

Tracker类:存储所有轨迹(集合)的状态和信息,负责轨迹的初始化以及所有轨迹(集合)的预测和更新同时封装了数据匹配模块

Track类

Track类是轨迹的一个基本单位,通过mean、covariance存储轨迹的位置和速度信息,利用卡尔曼滤波对轨迹信息进行预测和更新;同时定义了轨迹的三种状态(Tentative、Confirmed、Deleted)及三种状态之间的转换关系。其中三种状态如下:

Tentative态:代表轨迹初始(默认)状态,该状态轨迹更新时连续匹配成功次数(hits)+1,达到指定匹配成功次数(_n_init默认为3)转换为Confirmed态;经过级联匹配和IOU匹配后该轨迹状态仍为Tentative,则认为轨迹没有匹配上任何目标,转换为Deleted态。

Confirmed态:代表轨迹匹配成功状态。该状态轨迹预测而不更新时连续匹配失败次数(time_since_update)+1,达到连续匹配失败次数(_max_age默认为70)转换为Deleted态。

Deleted态:代表轨迹失效状态。该轨迹从所有轨迹(集合)中删除。

Tracker类

Tracker类是整个DeepSort框架的核心部分,整合了所有轨迹的信息和状态并逐一通过卡尔曼滤波对轨迹信息进行预测。由于在轨迹集合更新过程中涉及数据匹配模块,Tracker类的update函数封装了级联匹配和IOU匹配,根据对应匹配结果结合卡尔曼滤波更新轨迹集合中所有轨迹的状态和信息并且针对未匹配的目标(以及第一帧目标框)初始化轨迹状态和信息。该部分结合级联匹配和IOU匹配以及DeepSort框架图阅读。

卡尔曼滤波

卡尔曼滤波主要包含预测和更新。其中预测是根据系统当前状态对系统的下一步状态做出有根据的预测;更新是通过测量值与预测值综合计算出系统状态的最优估计值。具体可参考详解卡尔曼滤波原理博客,其中引用表达式:

预测:

更新:

数据匹配模块

数据匹配模块主要通过级联匹配和IOU匹配以及匈牙利算法来完成对轨迹和目标框的关联匹配,具体功能如下:

级联匹配:使用(ReID模块提取)外观特征结合运动特征计算相似度,通过匈牙利算法进行关联匹配

IOU匹配:使用轨迹和目标框面积计算IOU,通过匈牙利算法进行关联匹配

ReID模块

重识别简称为ReID,是利用对应模型判断不同时间段出现的目标是否属于同一对象。多目标跟踪问题为减少ID-Switch引入ReID模块提取对应目标框的外观语义特征,供后续级联匹配中计算相似度使用。其中ReID模块是独立于目标检测和轨迹跟踪模块,源代码中为满足多目标跟踪的实时性采用轻量化网络模型(OsNet等)。

其中ReID模块为保证多目标跟踪的实时性,多采用轻量化网络模型。轻量化网络模型的核心是在保持精度的前提下,从模型大小和推理速度两方面对网络进行轻量化改造。目前整理了有关轻量化网络模型有:SqueezeNet、Xception、MobileNet、ShuffleNet、OsNet以及GHostNet。

级联匹配和IOU匹配

级联匹配和IOU匹配用于轨迹和目标框的关联,封装于Tracker类中的_match函数中.

级联匹配和IOU匹配封装于Tracker类中用于轨迹和目标框的关联,结果为匹配成功轨迹和目标框、匹配失败轨迹以及匹配失败目标框,依据对应关联结果进行相应的后处理,结合Tracker类核心代码一起阅读。

级联匹配中关联轨迹和目标框的距离度量函数(metric)封装在NearestNeighborDistanceMetric类

级联匹配使用门控距离矩阵(运动特征)和外观语义特征距离矩阵(外观特征)加权计算代价矩阵,其中门控距离和外观语义特征距离都通过对应的阈值限制过大的值。匹配过程根据最大级联匹配深度(跟_max_age相同)逐层进行目标框与轨迹的关联,即根据连续匹配失败次数(time_since_update)与匹配深度对应,实现匹配失败次数少的轨迹优先匹配,失败次数多的轨迹靠后匹配。通过级联匹配,可以重新将被遮挡后重现的目标找回,降低ID-Switch。

IOU匹配使用IOU矩阵当作代价矩阵,其中的IOU计算就是通过轨迹和目标框的重叠面积除以总面积来完成,具体实现在iou_matching模块中,可参考源码。匹配过程根据对应轨迹和目标框直接进行关联。

匈牙利算法

匈牙利算法基于代价矩阵找到最小代价的分配方法,是解决分配问题中最优匹配(最小代价)的算法。

其中依据定理:

代价矩阵的行或列同时加或减一个数,得到新的代价矩阵的最优匹配与原代价矩阵相同。

算法步骤:

(1)若代价矩阵不为方阵,则在相应位置补0转换为方阵

(2)代价矩阵每一行减去此行最小值后每一列减去此列最小值

(3)用最少的横线或竖线覆盖代价矩阵中的所有0元素

(4)找出(3)中未被覆盖元素的最小值,且未被覆盖元素减去该最小值;对覆盖直线交叉点元素加上该最小值

(5)重复(3)和(4),直到覆盖线的数量等于对应方阵的维度数

过程演示(假设代价矩阵维度4×3):

deepsort官方库:https://github.com/nwojke/deep_sort

Deep SORT多目标跟踪算法代码解析(上、下)

https://zhuanlan.zhihu.com/p/133678626

https://zhuanlan.zhihu.com/p/133689982

B站学习视频

1、计算机视觉实战:智慧交通项目 deepsort+yolo讲解

https://www.bilibili.com/video/BV1Yv4y1S7XH/

2、Yolov7-tracker 多个跟踪器

https://www.bilibili.com/video/BV1zT411A7oy/

https://github.com/JackWoo0831/Yolov7-tracker

ByteTrack跟踪器:https://www.51cto.com/article/743776.html

名词解释

这三个参数常用于计算机视觉领域,特别是在目标检测和追踪中。

NMS_MAX_OVERLAP: 非极大抑制阈值,设置为1代表不进行抑制

非极大抑制(NMS,Non-Maximum Suppression)是目标检测中常用的一种技术,用于去除多余的重叠框。具体来说,当多个预测框高度重叠,并都预测同一个物体时,我们需要一个方法来选择其中一个最佳的预测框并抑制(或删除)其他的预测框。

“NMS_MAX_OVERLAP” 是一个阈值,用来决定何时应该抑制一个预测框。例如,如果两个预测框之间的重叠度(以Intersection over Union, IOU来度量)大于这个阈值,那么得分较低的那个预测框会被抑制。

当 “NMS_MAX_OVERLAP” 设置为1时,这意味着两个预测框的重叠度必须完全为1(完全重叠)才会抑制其中一个,这实际上是等同于不进行非极大抑制。

MAX_IOU_DISTANCE: 最大IOU距离阈值

这个参数用于目标追踪,例如SORT或DeepSORT等追踪算法。在目标追踪中,我们希望跟踪从一个帧到下一个帧的物体。

“MAX_IOU_DISTANCE” 是IOU匹配的门控阈值。轨迹与目标框之间的IOU距离为 1 − IOU,距离越小表示重叠越大。若一对轨迹和目标框的IOU距离超过这个阈值,则不允许二者匹配,它们会被视为不同的目标。

总的来说,这两个参数都涉及到计算预测框或目标之间的重叠度,并基于这个重叠度来进行某些操作(如抑制或匹配)。

NN_BUDGET:最大保存特征帧数,如果超过该帧数,将进行滚动保存

这意味着当为一个目标保存的特征数量超过”NN_BUDGET”指定的数量时,最旧的特征将被移除,以为最新的特征腾出空间。这类似于一个固定大小的队列或滚动缓冲区。

模型更改

objdetector.py封装的一个目标检测器,对视频中的物体进行检测

若需要更改模型,只需要更改objdetector.py下面的给出的部分:

    OBJ_LIST = ['person', 'car', 'bus', 'truck']